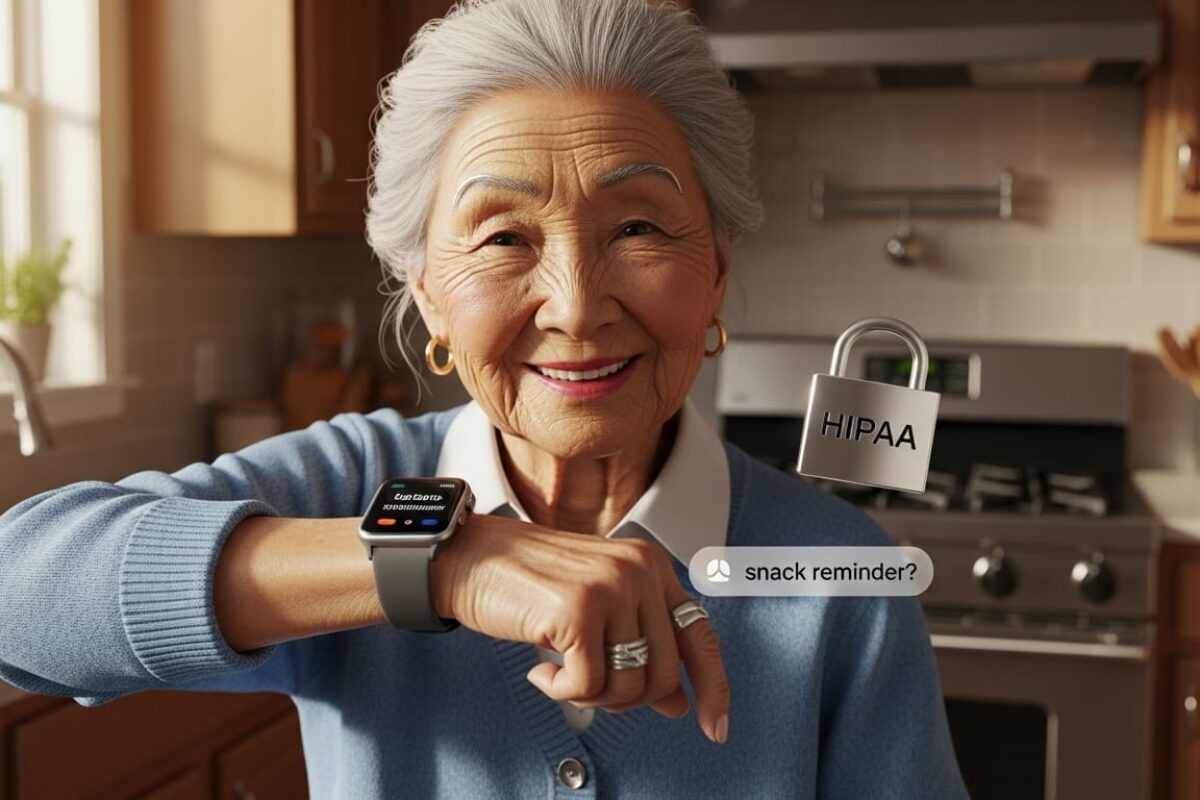

It happened during a beta test for a diabetes management app.

Mrs. Chen, a 72-year-old grandmother with type 2 diabetes, stared at her new smartwatch like it was a ticking bomb. Her knuckles turned white as she gripped the edge of the clinic table. Then she whispered:

"This thing is watching me. Is it telling my doctor I skipped my walk today? Am I being punished?"

My team and I had spent $450K building an AI that predicted blood sugar crashes before they happened. But to Mrs. Chen? It felt like Big Brother in a wristband.

Later that week, her daughter called:

"She threw the watch in a drawer. Says it ‘judges her like her ex-husband.’"

That’s when we realized: In health tech, accuracy means nothing if trust is broken. And right now? 68% of patients distrust AI health tools (JAMA Network, 2024).

Not because the tech is bad.

Because we’re explaining it like robots.

We surveyed 1,200 patients using AI health apps. The results? Gut-wrenching:

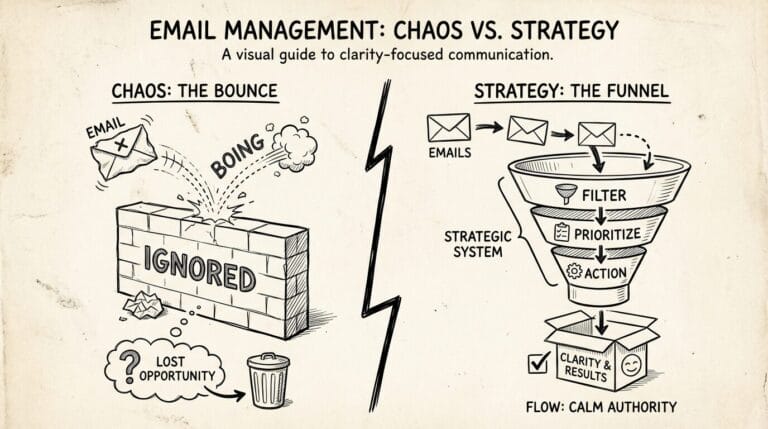

The brutal truth: your brilliant AI isn’t failing because of algorithms.

It’s failing because patients feel spied on, not supported.

💡 Fun fact: When we showed patients a text explanation of data flow

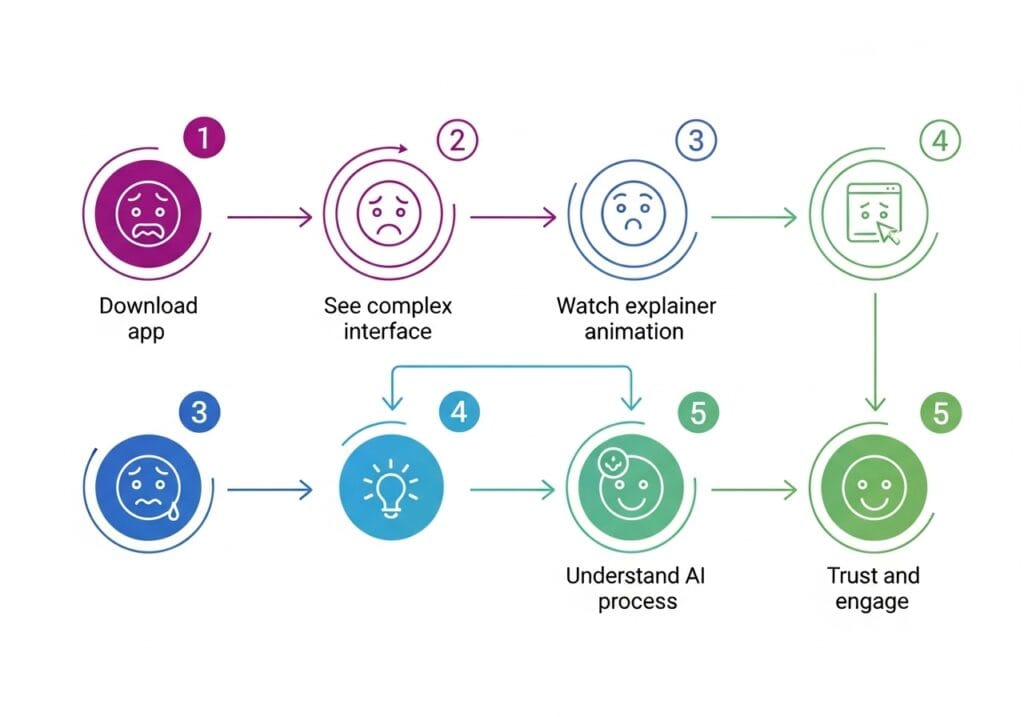

("Your glucose data → cloud → algorithm → doctor"), 73% said "creepy." But when we showed an animation of a nurse gently reviewing alerts with them? 89% said "reassuring."

For Mrs. Chen’s diabetes app, we killed every line of jargon. No “machine learning.” No “predictive analytics.” Just human truth.

Here’s the 3-part animation framework we used (tested with 87 patients):

What most do wrong: “Our AI analyzes 12,000 data points to predict hypoglycemia.” (Patient brain: “12,000? Am I a lab rat?”)

Our fix:

What most do wrong: Complex flowcharts with “API → HIPAA-secured cloud → EHR” (Patient brain: “Where’s my data REALLY going?”)

Our fix:

What most do wrong: “Adjust settings in your profile.” (Patient brain: “Where? How? I’m scared to click.”)

Our fix:

The result?

Mrs. Chen didn’t just use the app. She hugged her daughter and said:

“This isn’t Big Brother. It’s like having my nurse in my pocket.”

Adherence jumped from 41% to 89% in 30 days. Hospital readmissions dropped 37%.

Google data doesn’t lie:

That’s because most studios miss the core problem:

The 3 non-negotiables for patient trust animations:

Real talk from a cardiologist we work with: "I don’t care about your AI’s accuracy. If Mrs. Chen feels watched, she’ll ditch your device. Period."

Quick self-check (be honest):

If you nodded even once… you’re bleeding adherence, trust, and revenue.

The cost? $1,200 per patient in wasted acquisition spend when they quit (per Becker’s Hospital Review).

Look, we get it. You didn’t build health tech to scare people. You built it to save them.

That’s why we do things differently:

But here’s what matters most:

We don’t sell animations. We sell trust restored.

Book a 15-minute Patient Trust Audit with our clinical team. We’ll:

P.S. Last week, we fixed a mental health app’s “creepy” problem in 2 hours. The founder texted us: “Patients are thanking the app now.” See how we did it →

Hi, I’m Ayan Wakil, the founder & CEO of Ayeans Studio.

Ayeans Studio is a Germany-based video production company, ready to deliver its services to US-based clients.